Introduction: The New Frontier of Content Visibility

You already know how to climb Google’s results, but the rules are shifting fast. Large Language Model Optimization (LLMO) focuses on getting your articles referenced by AI systems like ChatGPT, Perplexity, Gemini, and Claude. That matters because people are starting their research inside chatbots instead of search boxes: 35 percent of Americans who used an AI chatbot last year said they did so instead of using a search engine.

Google sees the shift, too: its AI Overviews are rolling out to hundreds of millions of users this year, with a goal of reaching more than a billion by December.

So here’s the big question: if a buyer asks an LLM like ChatGPT for “the best cybersecurity marketing agency,” will your brand even show up in the response? Let’s break down how LLMO optimization compares with traditional SEO and how to get your content cited, rather than skipped, by the models powering tomorrow’s search.

What Is LLMO and Why Is It Gaining Momentum?

Defining LLMO in Simple Terms

LLMO optimization means structuring, writing, and distributing content so large language models (LLMs) can ingest and quote it accurately. Where SEO courts Google’s crawler, LLMO courts the training data and retrieval pipelines that AI models draw on when they generate answers.

Why Traditional SEO Isn’t Enough Anymore

Generative AI is eating search. Adobe found that AI search referrals to U.S. retail sites jumped 1,300 percent during the 2024 holiday season, and Statista projects that 90 million Americans will use generative AI as their primary search tool by 2027. Those eyeballs will never see a blue‑link SERP.

Where LLMs Get Their Information

ChatGPT, Perplexity, and similar systems train on or crawl:

- public web pages (especially those with clear HTML structure)

- PDFs on SlideShare, SSRN, and gov sites

- GitHub repos and README files

- community Q&As and forums

- datasets submitted to Hugging Face

If your insights live only behind heavy JavaScript or paywalls, generative AI systems may never learn they exist.
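A crawler that does not execute JavaScript only sees the HTML your server returns. As a rough sketch, assuming you have already saved that raw response (for example with `curl`), the Python below uses the standard-library `html.parser` to pull out the visible text and check whether a key sentence survives. The helper names (`visible_text`, `fact_is_crawlable`) are hypothetical, and real crawlers differ in how much they render.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def visible_text(raw_html: str) -> str:
    """Return the text a non-JavaScript crawler could read, whitespace-normalized."""
    parser = _TextExtractor()
    parser.feed(raw_html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())


def fact_is_crawlable(raw_html: str, fact: str) -> bool:
    """True if the fact sentence appears in the server-rendered visible text."""
    return " ".join(fact.split()) in visible_text(raw_html)
```

If a page's key stat is injected by a script, `fact_is_crawlable` returns `False`; put the same sentence in a plain `<p>` and it returns `True`.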

SEO vs. LLMO: A Practical Comparison

| Dimension | SEO (Search Engines) | LLMO Optimization (Large Language Models) |
| --- | --- | --- |
| Primary Goal | Earn high positions on SERPs to capture clicks | Earn citations or quoted passages inside AI answers to capture attention |
| How Content Is Discovered | Crawlers follow links, read sitemaps, parse HTML | Model trainers ingest public web, PDFs, GitHub, transcripts; retrieval systems embed & semantically match text |
| Key Ranking Signals | Backlinks, domain authority, keyword relevance, dwell time, structured data | Presence of clear, factual statements, recency, domain trust, semantic match, training‑data prevalence |
| Update Frequency | Google indexes new pages in hours or days; algorithm tweaks roll out constantly | Retrieval layers refresh every few weeks; core model snapshots update every 3‑6 months |
| Result Format | Blue links, featured snippets, rich cards | Inline quotes, footnote‑style links, conversational summaries |
| Optimization Levers | Title tags, meta descriptions, schema markup, internal linking, backlink outreach | Full‑sentence facts, tables/lists, FAQ blocks, syndication to AI‑crawled platforms, prompt‑based testing |
| Core Metrics | Organic sessions, click‑through rate, average position, backlink count | AI citations gained, referral traffic from chatbots, share‑of‑voice in AI answers, prompt‑level visibility |
| Typical Feedback Loop | Search Console data → adjust keywords/UX → re‑crawl → rank shift | Prompt tests in ChatGPT/Perplexity → refine statements/syndication → wait for retrieval refresh → visibility shift |
| Risk of Over‑Optimization | Keyword stuffing, manipulative link schemes → penalties | Repetitive phrasing, thin context → models ignore or truncate content |
| Tool Stack | Ahrefs, SEMrush, Screaming Frog, Surfer, Google Search Console | Perplexity.ai, AnswerSocrates, PromptLayer, Notion/GitHub for lightweight pages, SlideShare for PDF seeding |
| Strategic Role | Drive steady, intent‑rich traffic from search engines | Capture emerging “zero‑click” audience that relies on conversational AI for answers |
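The comparison table says retrieval systems embed text and semantically match it to a query. Production systems use learned embedding models; the sketch below swaps in a plain bag-of-words cosine similarity as a deliberately crude stand-in, only to illustrate why a passage that states the answer in the query's own terms outranks a vague one. The function names and sample passages are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts: a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_passages(query: str, passages: list[str]) -> list[tuple[float, str]]:
    """Score every passage against the query, best match first."""
    q = bag_of_words(query)
    scored = [(cosine(q, bag_of_words(p)), p) for p in passages]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)
```

For a query like “How much does HubSpot CRM cut onboarding time?”, a declarative sentence such as “HubSpot CRM cut onboarding time by 42 percent for B2B SaaS firms” scores above a generic line like “We are passionate about helping teams do more,” which shares no words with the query.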

Key Differences in the Optimization Approach

- SEO leans on keywords and link equity.

- LLMO optimization leans on plain‑language facts the model can lift verbatim.

- Your favorite SEO plugin can’t test whether Gemini will quote you; that takes manual prompt testing.

Common Pitfalls

Stuffing keywords like “best VPN tool” twenty times? Google may demote you for it, and an LLM is likely to treat it as spammy filler. Likewise, if you never state a fact as a full sentence, such as “87 percent of CISOs increased AI budgets in 2024,” the model has nothing neat to copy.
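A quick pre-publish check for the stuffing problem is to count how often the exact phrase repeats. This is a minimal sketch; the default `max_repeats=3` cutoff is an arbitrary assumption for illustration, not a documented limit from Google or any LLM vendor.

```python
import re

_WORD = re.compile(r"[a-z0-9']+")


def count_phrase(text: str, phrase: str) -> int:
    """Count how many times `phrase` appears as a consecutive word sequence (case-insensitive)."""
    words = _WORD.findall(text.lower())
    target = _WORD.findall(phrase.lower())
    n = len(target)
    if n == 0:
        return 0
    return sum(1 for i in range(len(words) - n + 1) if words[i : i + n] == target)


def looks_stuffed(text: str, phrase: str, max_repeats: int = 3) -> bool:
    """Flag text where the phrase repeats more often than a hypothetical cutoff."""
    return count_phrase(text, phrase) > max_repeats
```

Punctuation and capitalization are ignored, so “Best VPN tool?” and “the best vpn tool!” both count as hits.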

How to Make Your Content AI‑Readable and LLM‑Friendly

Structure for Semantic Understanding

Use H2‑H4 headers that answer a specific question, and state the key fact beneath them in a complete sentence.

That complete sentence gives the model a ready‑made pull quote.

Use Natural Language and Explicit Statements

Replace vague bullets with declarative lines:

“Our 2025 benchmark shows HubSpot CRM cut onboarding time by 42 percent for B2B SaaS firms.”

Include LLM‑Friendly Formats

- Tables for side‑by‑side comparisons

- Numbered lists for step‑by‑step instructions

- FAQs with concise answers

- Original data: surveys and benchmarks that no one else owns can tip the scales in your favor.

Original research is catnip for AI training pipelines.
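For FAQ blocks, one widely used way to make question-and-answer pairs machine-readable is schema.org `FAQPage` markup embedded as JSON-LD. The sketch below builds that tag from a list of pairs; the helper name is hypothetical, and whether a given LLM pipeline reads this markup is an assumption, so keep the same questions and answers in the visible page text as well.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage object wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so an answer containing "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape that decodes back to "/".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

Paste the returned tag into the page `<head>` or `<body>` alongside the visible FAQ.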

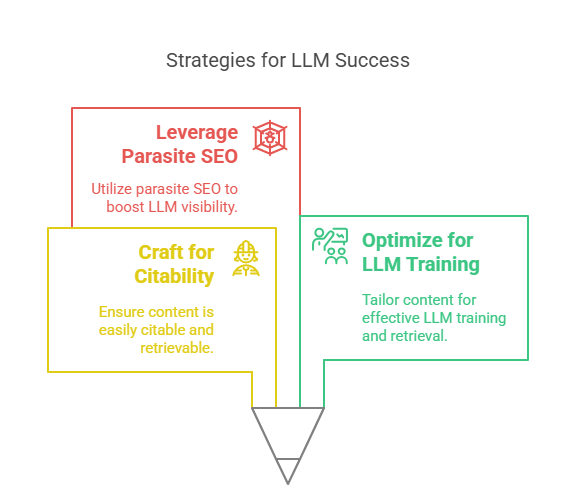

LLMO Content Creation Strategies for 2025

Craft for Citability

- Lead with the fact, then follow with the context.

- Attribute your own brand inside the sentence: “According to YourBrand’s 2025 study, …”

Optimize for LLM Training and Retrieval

- Publish the long‑form piece on your domain.

- Repurpose core charts or stats on SlideShare, Medium, and GitHub READMEs; LLMs crawl these heavily.

- Provide a lightweight HTML version (no gated pop‑ups, minimal JS) to optimize for LLMs and improve user experience.
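A lightweight HTML version can be as small as a title, a visible “last updated” date, and the key facts as full sentences, with no scripts at all. A standard-library sketch; the function name, its parameters, and the page layout are hypothetical placeholders, not a required template.

```python
from html import escape


def lightweight_page(title: str, updated: str, facts: list[str], source_url: str) -> str:
    """Render a script-free HTML summary with one <p> per full-sentence fact."""
    paragraphs = "\n".join(f"    <p>{escape(fact)}</p>" for fact in facts)
    return (
        "<!DOCTYPE html>\n"
        '<html lang="en">\n'
        "<head>\n"
        '  <meta charset="utf-8">\n'
        f"  <title>{escape(title)}</title>\n"
        "</head>\n"
        "<body>\n"
        "  <main>\n"
        f"    <h1>{escape(title)}</h1>\n"
        # A machine-readable date helps readers and models judge freshness.
        f'    <p>Last updated: <time datetime="{escape(updated)}">{escape(updated)}</time></p>\n'
        f"{paragraphs}\n"
        f'    <p>Full report: <a href="{escape(source_url)}">{escape(source_url)}</a></p>\n'
        "  </main>\n"
        "</body>\n"
        "</html>\n"
    )
```

Every value passes through `html.escape`, so characters like `<` and `&` in a stat cannot break the markup.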

Leverage Parasite SEO for LLM Visibility

Guest‑post your research on high‑authority domains (think dev.to or HackerNoon). The host domain’s authority can speed up crawling and inclusion in model refreshes.

The Rise of Generative Engine Optimization (GEO)

Generative Engine Optimization (GEO) is the umbrella strategy for visibility in any system that returns synthesized answers; LLMO is the tactical playbook inside GEO. Google’s AI Overviews alone will expose GEO content to hundreds of millions of users this year, and enterprise buyers are pouring $4.6 billion into generative AI apps that depend on reliable source data. Ignoring GEO now is like ignoring mobile search in 2012.

LLMO Audit Checklist

- Full‑sentence stats and takeaways

- Headers phrased as answers

- Proprietary data or benchmarks

- Syndicated versions on AI‑crawled platforms

- Minimal fluff, maximal specificity

Run the checklist on your top 10 traffic pages before the quarter ends.

Tools and Tactics to Boost AI Discoverability

Tools

- Surfer SEO/NeuronWriter: NLP term coverage plus readability grading

- AlsoAsked or AnswerSocrates: surfaces question clusters LLMs love

- Perplexity.ai: a quick way to test whether your page shows up in citations

- PromptLayer: track prompt‑response pairs while you iterate on wording

Distribution Tactics

- Cross‑post slides to SlideShare and link them back to your site.

- Upload CSV datasets to Hugging Face Datasets, where AI researchers and model builders can find them.

- Publish video explainers; YouTube transcripts can get vacuumed into model training and retrieval data.

- List whitepapers on Google Scholar for extra credibility

Real Example: Turning a Google Winner into an AI Citation

Before: A 2024 blog, “Best SaaS Project Management Tools,” ranked page one on Google but never surfaced in ChatGPT answers.

After:

- Added a table comparing setup time, integrations and pricing.

- Inserted our own survey stat (62 percent of PM leads prefer ClickUp over Asana).

- Posted an HTML‑only summary on Notion and a PDF on SlideShare.

What This Means for CMOs and Marketing Managers

Making the Case Internally

Show the board that AI citations drive incremental, high‑intent traffic. Adobe’s data says AI‑referred users view 12 percent more pages and bounce 23 percent less than classic search visitors. That’s a revenue argument, not a vanity metric.

Objection Handling

“Won’t this cannibalize SEO?” Nope. You’ll still rank on Google, plus you’ll capture the queries that never hit Google at all. LLMO optimization is additive.

Frequently Asked Questions about LLMO Optimization

What’s the quickest way to tell if my content already ranks in ChatGPT answers?

Drop the main keyword plus your brand name into Perplexity.ai and ChatGPT. If the model pulls a snippet from your site or links to your domain, your content is already in its training data or retrieval index. No citation means you need stronger LLMO optimization.
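If you save the answers from those manual tests, a few lines of code turn them into a trackable number: a simple citation rate that can serve as one rough proxy for the “share‑of‑voice in AI answers” metric in the comparison table. A minimal sketch, assuming each answer is pasted in as plain text; the class and function names are hypothetical, and plain substring matching will miss paraphrased mentions.

```python
from dataclasses import dataclass


@dataclass
class PromptTest:
    prompt: str
    answer: str  # the chatbot's answer copied as plain text, including any cited URLs


def mentions(answer: str, brand: str, domain: str) -> bool:
    """True if the answer names the brand or cites the domain (case-insensitive)."""
    text = answer.lower()
    return brand.lower() in text or domain.lower() in text


def citation_rate(tests: list[PromptTest], brand: str, domain: str) -> float:
    """Fraction of saved answers that mention the brand or cite its domain."""
    if not tests:
        return 0.0
    hits = sum(mentions(t.answer, brand, domain) for t in tests)
    return hits / len(tests)
```

Rerun the same prompt set after each content refresh and compare the rate over time.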

Does LLMO optimization replace traditional SEO?

No. Think of it as a layer on top. Traditional SEO still drives Google traffic, while LLMO optimization boosts visibility in AI answers. Together, they widen your reach.

How often should I refresh content for AI models?

Aim for quarterly updates. Retrieval‑augmented systems like Perplexity recrawl the web every few weeks, and model snapshots such as GPT‑4o refresh roughly every three to six months. Fresh stats and clear dates help the model pick your version over stale sources.

What’s the best tool stack for beginners?

Start simple:

- Surfer SEO for NLP keyword coverage.

- AnswerSocrates for question mining.

- Perplexity.ai for citation testing.

- Notion or GitHub Pages to host lightweight HTML summaries that LLMs crawl easily.

How do I convince stakeholders that LLMO delivers ROI?

Show engagement metrics. AI‑referred visitors usually bounce less and view more pages. Add a KPI for “AI citations gained” alongside organic sessions and backlink count to prove incremental value.

Are there risks to over‑optimizing for AI models?

Yes. If you chase the model too hard, stuffing the article with repetitive phrases or stripping out human context, you’ll hurt user experience and possibly trigger search‑engine spam filters. Keep the reader first and use LLMO optimization as a seasoning, not the entire recipe.

Conclusion: From Google to Generative, a Strategic Evolution

Search isn’t dying, but it is mutating into a landscape shared by traditional search engines and AI answer engines. LLMO optimization positions your brand where the conversation is already happening: inside AI chatbots and generative answer engines. Nail the checklist, syndicate your data, and speak in crisp, quotable sentences. Do that, and the next time someone asks ChatGPT for “the best AI‑savvy marketing agency,” the model will already know your name.