Learn how to replace dopamine-driven vibe coding with structured, board-approved context engineering workflows that reduce AI code defects and boost ROI.

Why Vibe Coding Failed the CFO Test

The Dopamine Trap: Instant Gratification vs. Maintainability

Vibe coding had a moment. Engineers loved the instant code generation, the rapid-fire dopamine hits from seeing something work in seconds. But once the buzz wore off, something else surfaced: AI code that couldn’t scale. According to a 2024 report, 76.4% of developers said they wouldn’t trust AI coding tools without manual review. It’s not just about productivity; it’s about maintainability and real outcomes.

What vibe coding gave us in speed, it took back in reliability. These prototype snippets often ended up in production, riddled with edge case bugs, misaligned logic, and undocumented magic. And when the CFO asks why your last sprint ended in a $300k rollback, you can’t shrug and say, “The AI model hallucinated.”

The Cost of Unseen Debt

One CTO in a Slack channel summed it up best: “We had to rewrite 40% of our vibe-coded POCs after Series C. It was dead weight.” These aren’t just anecdotal grievances. The real issue is technical debt disguised as progress. AI code without context multiplies misfires, especially in regulated industries like fintech or healthcare, where a single mistake can lead to outages or fines.

What Is Context Engineering? (Exec-Ready Explainer)

It’s Not Just Prompting

Prompting is just the tip. Context engineering is about feeding structured, relevant, persistent information into AI coding tools to reduce hallucinations, improve code quality, and align outputs with enterprise objectives. Think of it as the operating system beneath your AI assistant. Instead of relying on one-shot prompts, you’re setting guardrails with things like:

- Persistent memory

- Retrieval-augmented generation (RAG)

- Formal documentation context

- System-level rules

This structured context doesn’t just reduce AI code defects. It actually makes AI coding usable for enterprise software development at scale.

Capability Over Tools: What the Experts Say

Karpathy once said, “Prompting is to LLMs what regex was to search.” It’s clever, but crude. Teams at Shopify and DoNotPay are moving beyond clever prompts to simulation-based PRP systems that define exact business logic and test for edge cases pre-deployment.

They’re not throwing prompts at a LLM defect cost models and hoping for the best. They’re building stateful AI agent systems that are measurable, repeatable, and defensible.

Quantifying the Cost of Hallucination

The Formula

Here’s how to calculate the true cost of AI model hallucinations:

Defect Cost = (Number of Bugs x Mean Time To Resolve x Dev Cost per Hour) + Reputation Risk

If your devs spend 8 hours a week fixing AI-generated bugs at $150/hr, that’s $62,400/year just in the cleanup. And that doesn’t even count lost customer trust or SLA penalties.

Real-World Example: Fintech API Miswiring

A fintech firm wired a payout API incorrectly due to a hallucinated method name. It wasn’t caught during review and triggered a four-hour outage that cost $180k in refunds and partner penalties. That’s one bug, one API, one misfire. And it wasn’t some junior engineer’s fault; it was the AI system’s.

The PRP-Driven Workflow and Where Savings Occur

Anatomy of a PRP (Product-Requirements Prompt)

A prompt isn’t just another prompt. It’s a structured, Claude-friendly template that includes:

- Expected outcome

- Business rule

- Known constraints

- Failure edge cases

This clarity helps the AI model generate deterministic outputs and makes it easier for QA to verify behavior.

The 5 Cost-Saving Levers

Context engineering delivers savings you can actually quantify:

- Rework dropped 38% in one health-tech case

- Review times cut by 52% thanks to cleaner first drafts

- Security incident likelihood dropped by 60%

- Test coverage improved due to explicit failure cases in PRPS

- Developer morale improved by reducing cleanup fatigue

ROI Calculator Walk-Through

What Execs Already Track

Executives already track KPIs like escaped defect cost, dev hours burned per incident, and deployment velocity. This calculator takes those familiar metrics and reframes them through the lens of AI coding risk. Plug in:

- Number of monthly hallucination incidents

- Average developer hourly rate

- Average time to resolution per incident (MTTR)

- Risk-adjusted reputation impact

It’s designed to show not just the pain, but the upside. A shift to structured prompts and PRP workflows can show clear, spreadsheet-level savings.

Sample Scenario: $2.4M Annual Benefit

Let’s say you deal with 35 hallucinated bugs a month. If each takes 3 hours to resolve at $150/hr, that’s $189,000/year. Add QA retesting time, customer support involvement, and risk exposure, and your annual burn hits over $2.4M. When context engineering cuts that by even 50%, the payback window is under 6 months.

Board-Level KPI Set for Context Engineering

Context Coverage Ratio (CCR)

CCR measures how much of your software development is influenced by context-aware AI systems. The formula:

CCR = (Total PRP-backed Commits / Total AI-generated Commits) x 100

High CCR signals a lower risk of hallucination and better audit readiness. A healthy engineering organization should target a CCR of 70%+.

Hallucination Rate per 1k Lines of Code

This metric tracks how many defects from AI code make it into QA or production. It helps execs prioritize investments. A hallucination rate above 5 per 1k LoC in critical systems? Red flag.

Dev Velocity Uplift vs Baseline

After rolling out PRP workflows, compare the sprint throughput and lead time. Look for improvements in:

- Time to merge

- Time to fix

- Reviewer confidence scores

When you can ship faster and fix less, the roi of AI jumps.

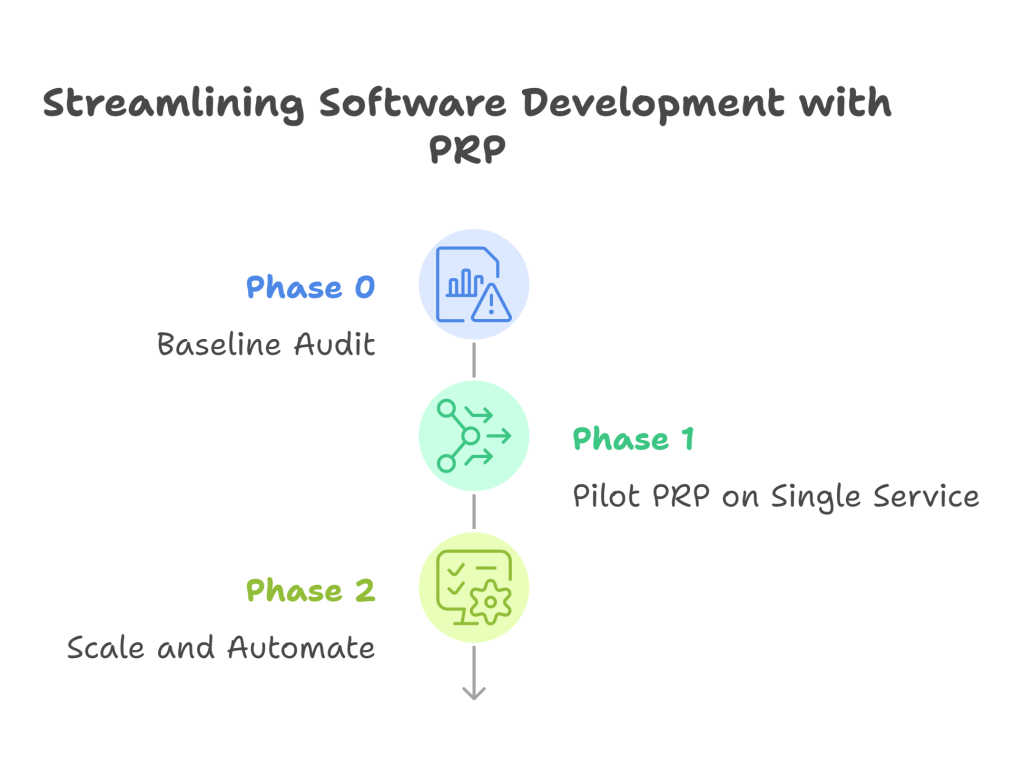

Implementation Roadmap (90-Day Plan)

Phase 0: Baseline Audit

- Review defect logs, pr review cycles, and QA incident logs

- Flag vibe-coded legacy risk zones

Phase 1: Pilot PRP on Single Service

- Choose one low-risk service (e.g., internal admin panel)

- Track metrics weekly: defect rate, review time, cycle time

Phase 2: Scale and Automate

- Use slash commands to generate props in Claude

- Build governance via AI tagging in GitHub workflows

- Automate output scoring before the merge

Risk and Compliance Lens

Injection and Poisoning Controls

As AI systems touch more sensitive workflows, so do attack surfaces. Here’s what helps:

- Use prompt-scanning filters to detect suspicious patterns

- Validate prompt sources with versioned PRP metadata

- Build output verification checks into the staging

These techniques help detect and prevent prompt injection or context poisoning in real-time.

Secure-by-Design Context Patterns

Security teams need to know that context engineering isn’t a loophole; it’s a framework. Build with:

- Encrypted context stores for RAG systems

- Role-based access to PRP templates

- Isolation environments for fine-tuning or instruction preload

Compliance auditors look for traceability. PRP logs, structured prompt IDs, and AI audit trails are your best allies

Executive FAQ

Is this more overhead for devs?

No. PRPS reduces the time spent on review and post-deploy fixes.

Is this ISO/SOC compliant?

Yes. PRPs offer a prompt trial that’s auditable.

Can this scale?

Absolutely. Each PRP is modular and service-specific. The rollout is gradual.

Conclusion

Context engineering is no longer just a developer trick; it’s the executive answer to AI risk, ROI pressure, and the accountability gap left by vibe coding.

If vibe coding was about speed, the rise of context engineering is about scale. If vibe coding chased demos, context engineering earns deployment. And if vibe coding made your board nervous, context engineering gives them metrics, safeguards, and a language they understand: cost, risk, and return on investment.

This isn’t about slowing things down. It’s about removing the guesswork. Structured PRPs, context-aware workflows, and audit-ready logs transform AI from a wildcard into a reliable, board-ready asset. One that reduces rework speeds up secure delivery, and directly impacts your bottom line.