Discussion of AI-generated content is no longer limited to tech forums. Regulators, social media companies, and brands are now asking the same question: should all AI-generated content be labeled as such? Some leaders contend that transparent labeling of AI-generated content preserves trust.

Others worry that an AI label may confuse readers or hurt reach. This article gives you a clear approach to labeling AI-generated content across blogs, videos, images, and other media so your brand stays compliant and builds credibility.

When to Add an AI Label to Content

It quickly becomes difficult to distinguish between “AI-generated” and “AI-assisted” content. To determine when an AI label is necessary, brands need a straightforward decision framework. Consider the label a trust signal rather than a legal checkbox.

Here’s the rule of thumb: Label AI-generated content whenever the use of AI changes the nature of the media in a way that could mislead a reasonable person.

1. Fully AI-Generated Content and Why Transparency Builds Trust

If an article, image, or video was created using AI from scratch, and there was no significant human co-creation, it should be clearly labeled as AI-generated content.

Example: An entire blog post drafted by ChatGPT with minimal edits, or a video voiceover produced by a synthetic voice clone.

2. Materially Altered Synthetic Content

If AI tools were used to generate or manipulate elements such as human faces, places, or voices in a way that looks authentic, a clear AI-generated content label is mandatory.

Example: A product demo video in which AI replicates a person’s voice or face, even though the brand controls the script.

3. Label AI-Generated Content When Authenticity Could Be Misleading

Content labels are not necessary for AI-powered spellcheckers, grammar corrections, or headline recommendations. These edits do not alter authenticity or produce fake content.

But if the AI tool adds new facts, generates sections of text, or creates synthetic content like stock-style imagery, an AI-generated content label helps avoid confusion.

4. If in Doubt, Disclose

Regulators like the FTC focus on deception, not on whether you used an AI tool. If the content could be misunderstood as purely human-created when, in fact, it was AI-generated, it should be labeled as such.

Platform Policy Updates and Current AI Act Regulations

Google’s Content Labels and AI-Generated Content Policy

Google has made clear how it will categorize content produced by artificial intelligence. Search systems focus on experience, expertise, authority, and trust. That means disclosure doesn’t hurt rankings. What matters is whether the AI-generated content is thin, spammy, or manipulative. Content provenance and authenticity signals, like bylines, credentials, and citations, outweigh whether the post used an AI tool.

TikTok AI Label Requirements for Synthetic Media

TikTok now requires creators to disclose synthetic, AI-generated content and automatically labels AI-generated media. This move sets a standard for other social media sites. An AI-generated video may include a synthetic voice, generated imagery, or text overlays. To stay compliant, include an AI-generated content note in the caption.

YouTube Content Creation and AI Labeling Rules

Under YouTube’s new policy, creators must disclose videos that include AI-generated content that looks real. Labeling can include content labels in the video description and on-screen disclaimers during the first few seconds. This approach is consistent with broader norms for trust and transparency.

FTC Policy on Deception, AI Act Enforcement, and Trust Signals

The FTC has warned that disclosing AI-generated content is required when failure to do so would mislead. From deepfake disclosure requirements to synthetic endorsements, the agency is focused on content authenticity and honesty. Misleading content created using AI could violate the FTC Act.

EU AI Act and Global Rules

The AI Act introduces requirements for disclosing AI-generated text, images, or synthetic media in certain use cases. Regulations push brands toward transparent labeling and encourage the use of standards like those from the Coalition for Content Provenance and Authenticity (C2PA).

Practical Playbook for Labeling AI-Generated Content

Now that you know when AI-generated content should be labeled, let’s break down how to actually do it. This is where many teams stumble, not because they’re hiding usage, but because there’s no shared playbook.

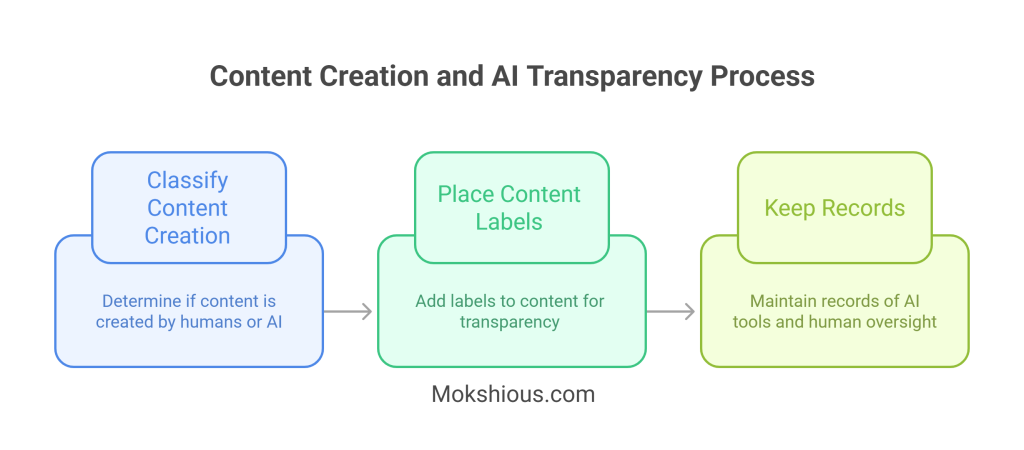

Step 1: Classify Content Creation, Human vs Generative AI

Think of content in three clear buckets:

- AI-assisted content (grammar checks, outline refinements, alt text)

  - Labels for public content are unnecessary.

  - Keep internal records for governance and consistency.

- AI-coauthored content (drafting sections, summarizing data, generating facts)

  - Include a brief AI disclaimer, such as “Our team edited this article after it was drafted with AI assistance.”

  - This conveys authenticity without sacrificing trustworthiness.

- AI-synthetic or realistic media (faces, voices, environments, manipulated content that looks real)

  - Must include prominent AI content labels in the media itself and in accompanying text.

  - Example: “Contains AI-generated images” or “Voice in this video is synthetic.”

This classification step helps content creators make quick, consistent decisions.
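The three buckets can be sketched as a small lookup that editors or a CMS plugin might call. This is a minimal illustration, not a standard schema: the `AIUsage` fields, the `Bucket` names, and the function names are all hypothetical, and the disclosure strings are simply the example wording from the list above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Bucket(Enum):
    AI_ASSISTED = "AI-assisted"
    AI_COAUTHORED = "AI-coauthored"
    AI_SYNTHETIC = "AI-synthetic"


@dataclass
class AIUsage:
    # Hypothetical intake-form flags; adapt them to your own policy.
    drafted_or_added_facts: bool = False     # AI drafted sections, summarized data, or generated facts
    realistic_synthetic_media: bool = False  # AI-made faces, voices, or environments that look real


# Example wording taken from the buckets above; None means no public label.
EXAMPLE_DISCLOSURE = {
    Bucket.AI_ASSISTED: None,
    Bucket.AI_COAUTHORED: "Our team edited this article after it was drafted with AI assistance.",
    Bucket.AI_SYNTHETIC: "Contains AI-generated images",
}


def classify(usage: AIUsage) -> Bucket:
    # Check the most sensitive bucket first so it always wins.
    if usage.realistic_synthetic_media:
        return Bucket.AI_SYNTHETIC
    if usage.drafted_or_added_facts:
        return Bucket.AI_COAUTHORED
    return Bucket.AI_ASSISTED


def disclosure_for(bucket: Bucket) -> Optional[str]:
    return EXAMPLE_DISCLOSURE[bucket]
```

Checking the synthetic-media flag first means a piece that mixes drafted text with a cloned voice lands in the strictest bucket.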

Step 2: Where to Place Content Labels for Transparency

Where you put the label matters almost as much as adding it.

Blog/Web Content

- Inline note near the byline or in the footer.

- Example: “This article contains AI-generated text and has been fact-checked by our editorial team.”

Social Media Platforms

- Short, clear labels in captions.

- Example: “Contains AI-generated visuals” for TikTok, Instagram, or LinkedIn posts.

Video Content

- On-screen slug for the first 5–10 seconds, plus a second disclosure in the description.

- Example: “Voiceover created using AI.”

Image Content

- Caption note + alt text with content provenance and authenticity information.

- For higher trust, embed provenance metadata using C2PA or Content Credentials so the label travels with the image.
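One way to make these placement rules checkable is a per-channel table of required placements. The sketch below is only an illustration: the channel keys and placement names are made-up identifiers, and it treats embedded provenance metadata as optional because the guidance above frames it as a higher-trust extra.

```python
# Required label placements per channel (hypothetical identifiers).
REQUIRED_PLACEMENTS = {
    "blog": ["byline_or_footer_note"],
    "social": ["caption_label"],
    "video": ["on_screen_slug", "description_disclosure"],
    "image": ["caption_note", "alt_text"],
}


def missing_placements(channel: str, applied: set) -> list:
    """Return the required placements that have not been applied yet."""
    return [p for p in REQUIRED_PLACEMENTS[channel] if p not in applied]


# A video with only the on-screen slug still needs the description disclosure.
print(missing_placements("video", {"on_screen_slug"}))
```

A pre-publish check could block any post for which this list is not empty.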

Step 3: Keep Records of AI Tools and Human Oversight

This is where most teams fall short. Labels are great, but if you can’t back them up, you risk compliance headaches later.

- Log everything: which AI tool was used, prompts, model versions, human editors, and sources.

- Version control: save drafts and the final published version to show human oversight.

- Audit trails: if regulators, platforms, or customers ask, “How was this created using AI?” you can answer with clarity.
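A log entry covering the fields above could look like the following sketch. The `AIUsageRecord` name and its field names are assumptions chosen for illustration, and writing one JSON object per line is just one convenient storage format, not a regulatory requirement.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AIUsageRecord:
    # Hypothetical field names mirroring the checklist above.
    content_id: str
    tool: str
    model_version: str
    prompts: list
    human_editors: list
    sources: list
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: AIUsageRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = AIUsageRecord(
    content_id="post-001",           # placeholder values for illustration
    tool="example-tool",
    model_version="example-version",
    prompts=["Draft an outline on labeling AI content"],
    human_editors=["editor@example.com"],
    sources=["https://example.com/source"],
)
print(to_log_line(record))
```

Appending these lines to a file kept under version control gives you both the log and the audit trail in one place.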

Governance and Workflow for AI Label Policy

A clear governance framework is the backbone of consistent AI-generated content labeling. Without it, teams fall into the trap of ad hoc decisions: one editor adds a label, another forgets, and soon your brand risks inconsistent messaging or, worse, a regulatory strike.

Here’s how to build a governance and workflow system that works in real life:

One-Page Policy Framework for Labeling AI-Generated Content

- Define exactly what qualifies as AI-assisted, AI-coauthored, and AI-synthetic content.

- Spell out requirements for content labels, disclosure language, and placement for each content type (blog, image, video, social).

- Include your employee AI usage policy, so everyone in the organization follows the same guardrails.

Human Review Checklist to Ensure Transparency and Trust

- No matter how advanced generative AI gets, human oversight is non-negotiable.

- Add a QA checklist that covers: fact-checking, bias detection, tone consistency, IP rights, and labeling accuracy.

- Encourage editors to ask: “Could this piece of content be mistaken for human-only work if I don’t label it?” If yes, it needs an AI label.

Using C2PA for Image Provenance and Content Creation Proof

- Standards and tools like C2PA and Content Credentials embed a credential directly in the media file. That means the provenance of AI-generated material stays intact as the content moves across social media platforms, DAM systems, and even third-party sites.

- For sensitive industries (finance, health, politics), embedding content provenance and authenticity signals can protect you from accusations of misleading content.

Vendor Requirements for AI Label and Disclosure Policy

- If you outsource to agencies or freelancers, require them to follow the same governance framework. Contracts should mandate disclosing AI-generated content and embedding provenance metadata whenever possible.

This governance workflow not only keeps your team aligned but also provides a defensible framework if your brand ever faces scrutiny under the AI Act or new FTC guidance.

Measure and Iterate on AI Content Labels

Governance is only half the battle. To make sure your labeling strategy works in practice, you need to measure and iterate. Treat labeling AI-generated content like you would a marketing campaign: track the results and refine.

Monitor Audience Responses to AI Content Labels

- Track mentions of “AI” or “robotic” in customer complaints or feedback.

- Watch for patterns: do users trust labeled posts more? Do unlabeled posts get flagged?

Measure Performance Impact

- Look at time on page, CTR, and watch time for content that carries AI labels.

- A/B test different phrasings of labels. For example, “AI-assisted” may sound more human-friendly than “AI-generated,” while still meeting disclosure requirements.

Track Compliance Metrics

- Keep a running metric of what percentage of your published posts have proper content labels.

- Aim for 100% accuracy in labeling AI-generated content across all content types.
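The compliance metric can be computed in a few lines. This sketch assumes each published post is a dict with two hypothetical boolean keys, `needs_label` and `has_label`, and counts a post as correct when the two agree, so a missing label and an unnecessary one both lower the score.

```python
def labeling_accuracy(posts: list) -> float:
    """Percentage of posts whose label status matches what the policy requires."""
    if not posts:
        return 100.0  # nothing published, so nothing is mislabeled
    correct = sum(1 for p in posts if p["needs_label"] == p["has_label"])
    return 100.0 * correct / len(posts)


posts = [
    {"needs_label": True, "has_label": True},    # correct
    {"needs_label": False, "has_label": False},  # correct
    {"needs_label": True, "has_label": False},   # missing label
    {"needs_label": True, "has_label": True},    # correct
]
print(labeling_accuracy(posts))
```

If you only care about missing labels, filter to posts where `needs_label` is true before computing the ratio.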

Adjust Based on Platform Changes

- Policies from TikTok, YouTube, and Google evolve quickly. Build in a quarterly review to align your governance with new AI content labels or disclosure requirements.

By measuring both user trust and operational compliance, you can refine your content governance framework into something that boosts brand trust rather than feeling like red tape.

Conclusion: Why Transparency in Labeling AI-Generated Content Builds Long-Term Trust

The rise of generative AI has completely changed how brands approach content creation and how fast they can produce it. But with that speed comes responsibility. Clear and consistent labeling of AI-generated content is no longer just a nice-to-have; it’s a requirement for building transparency and trust. Audiences are increasingly aware of synthetic content, and social media platforms, along with regulators, are setting stronger policies to ensure honesty.

Adding an AI label doesn’t diminish the value of your work. Instead, it signals that your brand has nothing to hide. When you openly label AI-generated images, video, or text, you show respect for the people consuming your content. That honesty protects against accusations of misleading content and positions your company as proactive rather than reactive when new AI Act rules or FTC policies come into play.