What Exactly Is Vibe Coding and Why CISOs Hate It

If you’ve ever seen a developer whip up an entire function in seconds with Copilot or ChatGPT, you’ve witnessed vibe coding in action. It’s fast, it’s slick, and it feels like magic until it breaks something critical in production.

Vibe coding skips structure, whereas secure vibe coding builds best practices back in. Developers toss in vague prompts, skim the AI-generated code, then paste and ship. No security review, no validation, just vibes.

CISOs and DevSecOps leads? They’re sweating. According to Codeto’s AI Code Quality Survey, 76.4% of developers say they don’t fully trust AI-generated code. And they’re right to worry: this kind of coding introduces hidden security risks that slip past even experienced developers.

The Dopamine-Driven Shortcut That Introduces Silent CVEs

Chasing quick wins, developers often copy snippets straight from AI tools without reviewing them. But those snippets can contain hardcoded secrets, outdated auth logic, or vulnerable regex patterns.

Take this real-world scenario: a developer asks ChatGPT to write a secure login function. It returns a working one, except it stores credentials in plain text. The dev doesn’t check, deploys it, and no one notices for months. That’s a silent CVE waiting to happen.
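For contrast, here is a minimal sketch of what that login code could have done with credentials, using only Python’s standard library. The function names `hash_password` and `verify_password` and the iteration count are illustrative assumptions, not output from any AI tool.

```python
import hashlib
import hmac
import secrets

# Illustrative work factor (an assumption); tune it to your hardware and policy.
PBKDF2_ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) so the plain-text password is never stored."""
    salt = secrets.token_bytes(16)
    key = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    """Re-derive the key from the candidate password and compare in constant time."""
    key = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )
    return hmac.compare_digest(key, expected_key)
```

Only the salt and derived key would be persisted, so a leaked table no longer hands out usable passwords.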

Worse, AI coding assistants might suggest GPL-licensed code without flagging it, causing supply-chain contamination issues down the line.

Common Security Risks in Vibe Coding

Vibe coding, meaning AI-assisted development without guardrails, often feels seamless, intuitive, and blazing fast. But behind the quick wins lie deep security pitfalls. Most of these hazards go undetected and can turn into security flaws. Buried in pasted code, skipped validations, and hallucinated logic, they quietly make their way into production.

1. Prompt Injection Vulnerabilities

When developers feed open-ended instructions into an AI agent, they risk getting back responses that include unintended or even dangerous behaviors. A benign prompt like “write a database cleanup script” can return destructive commands like dropping entire tables or deleting directories if not carefully scoped.

2. Hardcoded Secrets and Credentials

AI-generated code frequently includes placeholder secrets or even hardcoded API keys, passwords, and tokens. When developers copy code snippets into production, they frequently forget about these, which leaves room for unauthorized access or credential leaks.
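A quick pre-commit check can catch the obvious cases. The sketch below is a deliberately small regex scanner; the rule names and patterns are assumptions for illustration, and dedicated secret scanners ship far more rules.

```python
import re

# Hypothetical, intentionally small rule set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "assigned_secret": re.compile(
        r"(?i)\b(?:api_?key|password|passwd|secret|token)\b\s*[:=]\s*['\"][^'\"]{4,}['\"]"
    ),
}


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings
```

A value read from the environment, such as `os.environ["DB_PASSWORD"]`, does not match, because the rule only fires on quoted literals assigned to a secret-looking name.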

3. Insecure Defaults and Misconfigurations

Vibe-coded functions frequently use general-purpose templates, which by default fall back on unsafe techniques like exposing services on 0.0.0.0, using eval(), or skipping input sanitization. These oversights can create exploitable endpoints, especially in fast-moving SaaS stacks.
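Two of those defaults are easy to spot mechanically. As a rough sketch, assuming the generated code is Python, the standard `ast` module can flag `eval()`/`exec()` calls and literal `"0.0.0.0"` bindings. The function name and the list of risky calls are illustrative, not exhaustive.

```python
import ast

RISKY_CALLS = {"eval", "exec"}  # illustrative, not exhaustive


def find_insecure_defaults(source: str) -> list[str]:
    """Flag eval()/exec() calls and string literals that bind to all interfaces."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in RISKY_CALLS:
                findings.append(f"line {node.lineno}: call to {node.func.id}()")
        elif isinstance(node, ast.Constant) and node.value == "0.0.0.0":
            findings.append(f"line {node.lineno}: binds to 0.0.0.0")
    return findings
```

The code is only parsed, never executed, so it is safe to run on untrusted suggestions.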

4. OSS License Violations and Attribution Gaps

Many AI tools generate code based on licensed repositories from GitHub but omit proper attribution or legal context. Accidentally shipping GPL or AGPL code in a proprietary product can result in serious compliance violations.

5. Shadow Dependencies and Unvetted Packages

AI-generated suggestions can introduce external libraries that haven’t been vetted for vulnerabilities, maintenance health, or compliance. Without proper dependency hygiene or SBOM integration, devs may be importing risks directly into their stack.
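One lightweight form of dependency hygiene is to compare what a suggestion imports against a vetted list before it lands. This is a sketch under assumptions: the allowlist contents and the function name `unvetted_imports` are hypothetical, and in practice the list would come from your SBOM tooling.

```python
import ast

# Hypothetical allowlist; in practice this would be derived from a vetted SBOM.
APPROVED_PACKAGES = {"json", "logging", "requests"}


def unvetted_imports(source: str, approved: set[str] = APPROVED_PACKAGES) -> set[str]:
    """Return top-level packages imported by `source` that are not approved."""
    imported = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            imported.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports are local code.
            imported.add(node.module.split(".")[0])
    return imported - approved
```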

6. Poor Input Validation and Lack of Guardrails

Many LLMs generate code that doesn’t escape user input or enforce type safety, which is critical for application security. This lack of basic input validation reintroduces classic CVEs like SQL injection, XSS, or command injection, especially in dynamically typed languages like Python or JavaScript.
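For the SQL injection case, the fix is usually a bound parameter rather than string formatting. A minimal sketch with Python’s built-in `sqlite3` module follows; the `users` table and the `find_user` helper are made up for the example.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str) -> list[tuple]:
    """Look up a user with a bound parameter instead of string formatting."""
    # The "?" placeholder makes the driver treat `username` purely as data.
    cursor = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cursor.fetchall()


# Throwaway in-memory database so the example is self-contained.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
```

With this shape, a classic payload such as `' OR '1'='1` is compared as a literal name and matches nothing.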

7. Lack of Logging and Observability

Vibe-generated code often lacks operational hygiene: no logging, no monitoring, and no error handling. This creates black boxes where security events and user behaviors go untracked, delaying response and root cause analysis during an incident.

8. Model Hallucinations that Ship

LLMs can generate syntactically valid but logically false code. In a fast-moving environment where output isn’t deeply reviewed, these hallucinations can introduce bugs that look like features, until they cause a critical failure.

9. Prompt Overreach and Scope Creep

A prompt asking for a function might generate full classes, modules, or even configurations that exceed the intended scope. This over-generation can introduce extra functionality or services you never meant to expose, like authentication bypass logic or debug ports.

The AI-Powered Threat Model for Pair-Programming

Vibe coding introduces a new class of threats. Let’s reframe traditional STRIDE threat modeling for LLM-assisted development:

Injection Vulnerabilities: From Innocent Prompt to RCE

Prompt injection is the new SQL injection. It’s sneaky, subtle, and very real. Say a dev types “write a script to clean a temp folder” into an AI coding tool. The model adds a bonus: rm -rf /. Copy, paste, and the damage is done before anyone checks the output against application security standards.

Red-teaming demos have shown how a single crafted comment or shell output injected via a prompt can lead to full-blown Remote Code Execution. Just because it came from ChatGPT doesn’t mean it’s safe.

Supply Chain and OSS Attribution Risks

Another angle: supply chain contamination. Copilot might pull code from public repos with restrictive licenses. That GPL-licensed snippet embedded in your closed-source app? Legal landmine.

Security teams have already flagged AI suggestions that violated internal IP policies or compliance guidelines. When code suggestions skip attribution, it’s not just sloppy; it’s dangerous.

Context Engineering 101 (Security Lens)

So, how do you make AI-powered development secure and keep it aligned with best practices? You move from vibes to structure. Context engineering flips the workflow: define what the AI agent should know and control how it responds.

Why Context > Prompt Slashes Attack Surface

Karpathy nailed it: “Prompting is programming.” But with vibe coding, there’s no guardrail. Context engineering wraps your prompts with memory, input validation, rule enforcement, and state awareness. Instead of hoping the model guesses right, you give it boundaries.

Picture the difference:

- Vibe Coding: “Write a function to delete files.”

- Context-Engineered: “Based on this policy, generate a script that deletes only temp files in /user/dir, excludes system folders, and logs each step.”
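To make the context-engineered prompt concrete, here is a sketch of the kind of script it is asking for: deletion limited to one directory tree, temp files only, every step logged. The `.tmp` suffix, the logger name, and the function name are assumptions for illustration.

```python
import logging
from pathlib import Path

logger = logging.getLogger("temp_cleanup")


def delete_temp_files(root: Path, suffix: str = ".tmp") -> list[Path]:
    """Delete only regular files ending in `suffix` that live under `root`."""
    root = root.resolve()
    deleted = []
    # Materialize the matches first so deleting does not disturb the traversal.
    for path in sorted(root.rglob(f"*{suffix}")):
        # Skip symlinks, non-files, and anything that resolves outside the root.
        if (
            path.is_symlink()
            or not path.is_file()
            or not path.resolve().is_relative_to(root)
        ):
            logger.info("skipped %s", path)
            continue
        path.unlink()
        logger.info("deleted %s", path)
        deleted.append(path)
    return deleted
```

The boundary lives in the code itself: nothing outside `root` can be touched, whatever the caller passes as a suffix.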

Secure-by-Design Prompt Layers

Modern tools like LangChain let you build layers around your prompts:

- Retrieval-augmented generation (RAG) limits hallucinations.

- Slash commands pre-mask secrets.

- Context validation patterns flag unsafe suggestions before they run.

Think of it as a firewall for your prompts.
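A context validation pattern can start as a simple denylist that runs over every suggestion before anyone executes it. The sketch below is not a LangChain API; the rule names and regexes are illustrative assumptions, and a real rule set would be broader and policy-driven.

```python
import re

# Illustrative denylist (an assumption, not a complete policy).
UNSAFE_PATTERNS = {
    "recursive delete of filesystem root": re.compile(
        r"\brm\s+-(?:rf|fr)\s+/(?:\s|\*|$)"
    ),
    "destructive SQL": re.compile(r"(?i)\bdrop\s+table\b"),
    "remote script piped to shell": re.compile(r"\bcurl\b[^|\n]*\|\s*(?:ba)?sh\b"),
}


def review_suggestion(suggestion: str) -> list[str]:
    """Return the name of every unsafe pattern found in a model suggestion."""
    return [
        name
        for name, pattern in UNSAFE_PATTERNS.items()
        if pattern.search(suggestion)
    ]
```

An empty list means nothing matched, not that the suggestion is safe; the check is a first filter in front of human review.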

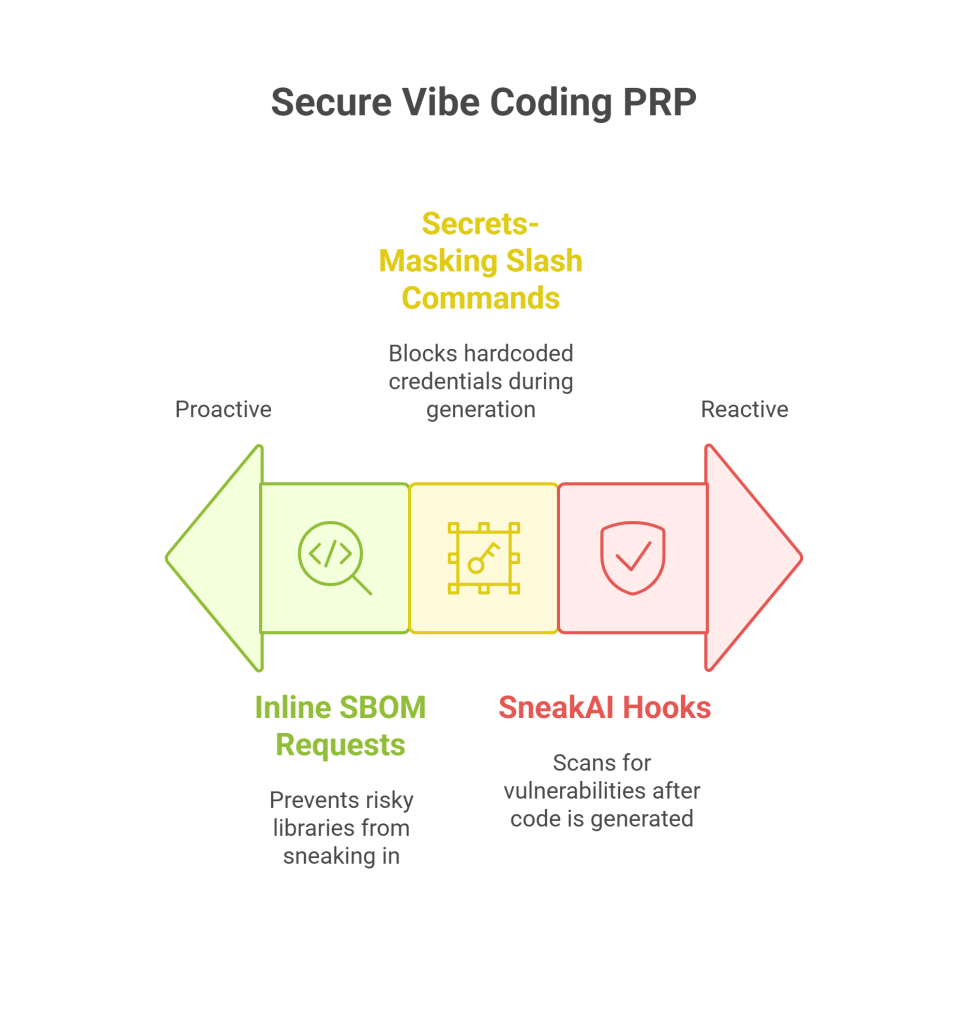

Anatomy of a Secure Vibe Coding PRP (Product Requirements Prompt)

A secure PRP (Product Requirements Prompt) is how you keep your LLMs from becoming liabilities in software development. Think of it as structured scaffolding, just enough context, compliance, and constraints to shape what the AI does without choking dev creativity.

4.1 Inline SBOM Requests

Ask the LLM to list its dependency tree before it generates code. This helps automate Software Bill of Materials checks, catching risky libraries before they sneak in.

4.2 Secrets-Masking Slash Commands

Start with pre-checks like /mask-secrets to sanitize memory before generation, enhancing data protection. It’s simple, fast, and blocks hardcoded credentials.
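What a command like /mask-secrets does under the hood will vary by tool. As a rough sketch of the idea, the hypothetical `mask_secrets` function below redacts quoted values assigned to secret-looking names before the context reaches the model; the pattern is an assumption and would need extending for your own credential formats.

```python
import re

# Hypothetical pattern: a secret-looking name, an assignment, and a quoted value.
_SECRET_VALUE = re.compile(
    r"(?i)\b(api_?key|password|secret|token)\b(\s*[:=]\s*)(['\"])[^'\"]+\3"
)


def mask_secrets(context: str) -> str:
    """Replace quoted secret values with a placeholder, keeping the variable name."""
    return _SECRET_VALUE.sub(r"\1\2\3<MASKED>\3", context)
```

Keeping the variable name in place means the model still sees that a token exists and can generate code that reads it from configuration.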

4.3 SneakAI Hooks for Static Analysis

After generation, plug the output into Sneak’s AI code-scanning guardrails. They scan for known vulnerabilities, insecure patterns, and missing auth flows.

Cost of an Exploit vs. Cost of Context Layer

This is where numbers speak louder than vibes.

Risk Equation

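(MTTI + MTTD) × developer-hours per day × $DevHr + Fines + Downtime vs. Cost of implementing secure context workflows

Let’s break it down:

- Mean Time To Identify (MTTI): 4 days

- Mean Time To Detect (MTTD): 2 days

- Developer Cost: $150/hr

- Fines (e.g., GDPR): $75,000

- Downtime losses: $30,000

Total: $119,400 for a single slip-up. That figure implies 96 developer-hours of labor ($14,400), for example two developers working eight-hour days across all six days, plus $105,000 in fines and downtime.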
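The arithmetic behind that total can be sketched in a few lines. Two assumptions are baked in and worth calling out: the identification and detection times are added together, and 16 developer-hours are spent per day (for example, two developers), which is the staffing figure that reproduces the $119,400 total.

```python
def exploit_cost(
    mtti_days: float,
    mttd_days: float,
    dev_hours_per_day: float,  # assumed staffing level, not stated in the article
    dev_rate: float,
    fines: float,
    downtime: float,
) -> float:
    """Labor cost over the exposure window plus fines and downtime losses."""
    labor = (mtti_days + mttd_days) * dev_hours_per_day * dev_rate
    return labor + fines + downtime


total = exploit_cost(
    mtti_days=4,
    mttd_days=2,
    dev_hours_per_day=16,
    dev_rate=150,
    fines=75_000,
    downtime=30_000,
)
print(f"${total:,}")  # prints $119,400
```

Swap in your own incident numbers to compare the result against the cost of rolling out a context layer.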

Real Case: $220K Fix Saved by CE Rollout

A mid-size SaaS company accidentally shipped PII due to a vibe-coded webhook. Fixing it cost over $220,000 in dev hours, legal, and support.

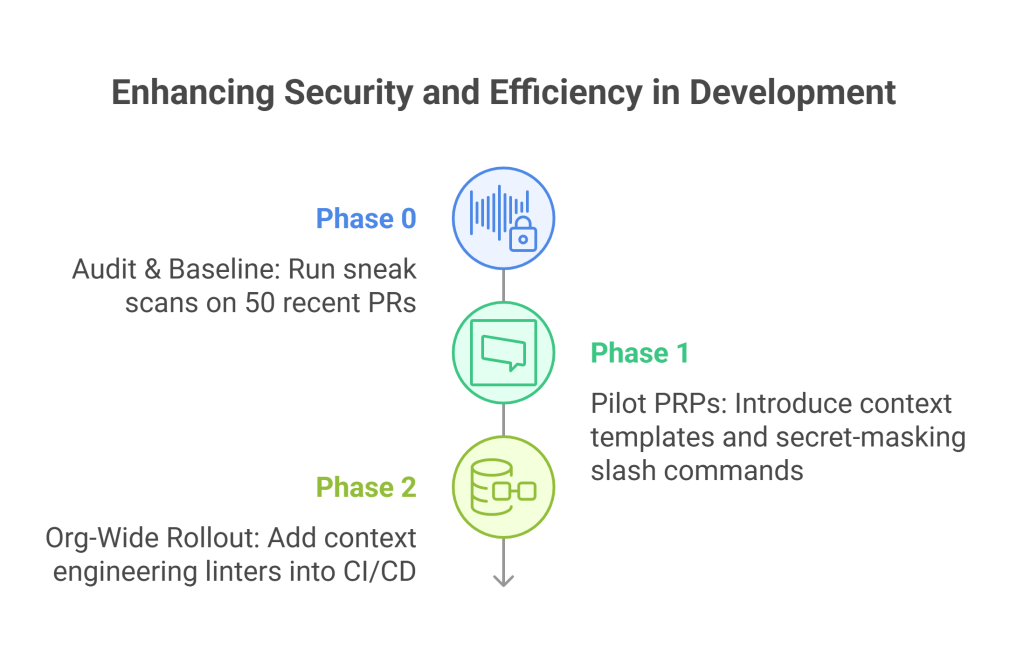

30-60-90 Day Rollout Plan for Context Engineering

Here’s how to transition from vibe coding chaos to secure-by-design workflows:

Phase 0: Audit & Baseline

- Run sneak scans on 50 recent PRs

- Identify insecure prompt patterns and high-risk APIs

Phase 1: Pilot PRPs

- Pick the top 3 high-impact workflows (auth, CRUD, webhook)

- Introduce context templates and secret-masking slash commands

Phase 2: Org-Wide Rollout

- Add context engineering linters into CI/CD to enhance data integrity and application security.

- Train devs using IDE-injected PRPs

- Reward low-defect PRs with bonus points in sprint retros

DevSecOps KPI Dashboard (What to Track)

Track measurable signals that prove security and speed can co-exist in software development.

- Context-Coverage% → % of PRs using secure templates

- Injection Block Rate → # of AI-sourced bugs blocked

- PRs w/ CE Annotations → Template adoption rate

- Mean Time to Fix → Bug fix velocity improvement
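As one example of turning these signals into numbers, Context-Coverage% can be computed straight from PR metadata. The `PullRequest` record and its `uses_secure_template` field are hypothetical; how you detect template usage depends on your tooling.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    """Hypothetical PR record; the field names are assumptions for illustration."""

    number: int
    uses_secure_template: bool


def context_coverage(prs: list[PullRequest]) -> float:
    """Percentage of PRs that used a secure template (0.0 when there are no PRs)."""
    if not prs:
        return 0.0
    covered = sum(pr.uses_secure_template for pr in prs)
    return 100.0 * covered / len(prs)
```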

Executive FAQ: Objection Handling Script

Q: Will this slow down our developers?

Not if implemented correctly. With prebuilt PRP templates embedded directly into the IDE and CLI workflows, context engineering becomes nearly invisible to devs. In fact, it reduces debugging time and rework from insecure AI code, which actually speeds up net dev velocity over a 2–3 sprint window.

Q: Aren’t large language models sandboxed already?

They’re sandboxed at runtime, but the real problem is what gets injected during prompt crafting. Prompt injection operates at the interaction level, not the execution layer. If the prompt is poisoned and the dev blindly ships the result, sandboxing doesn’t stop that risk.

Q: This is all internal. Why does it matter if something’s insecure?

Internal doesn’t mean invulnerable. One rogue script, forgotten debug hook, or misattributed open-source license can lead to serious security, legal, and reputational fallout. Plus, many “internal” tools get reused in customer-facing apps later. The line between internal and external is blurrier than ever.

Q: Can’t we just train devs to be more careful instead of adding context guardrails?

Training helps, but it’s not scalable or consistent. Guardrails don’t replace good devs; they augment them. You don’t teach someone to drive without brakes. CE systems catch issues developers miss under deadline pressure.

Q: Won’t vendors just lock us into their CE tools?

Not if you use open-source context frameworks like LangChain Guardrails or Rebuff. Many enterprises even build internal context middleware for better risk mitigation and secure coding practices. Mokshious helps teams stand up fast, using portable formats that prevent vendor lock-in.

Conclusion: Book a Context Engineering Call

The age of vibe coding has created a dangerous illusion: that code generated at lightning speed is safe enough to deploy. But speed without structure is a ticking time bomb, especially in environments with regulatory exposure, customer-facing AI, or shared infrastructure, where data leakage can occur.

Context engineering doesn’t just reduce risk; it builds a culture of secure coding among vibe coders and amplifies trust, consistency, and developer efficiency. When your prompts are backed by real-time validations, stateful memory, and policy-aware generation, your teams don’t just write faster; they write smarter, safer, and with fewer fire drills.